Chapter 2: Review of the Literature

As critical attention on postsecondary student outcomes and program evaluation continues to increase, Research & Planning (R&P) already plays a pivotal role in producing high-quality research. Nationally, most recent studies use observational data to estimate correlations between variables such as degree level and earnings. However, the extensive data to which R&P has access allows for high-quality causal studies.

This chapter addresses several questions: Why is program evaluation important? What does past research show? Why is research needed for Wyoming students? What is the best way to evaluate student outcomes?

Why is Program Evaluation Important?

Evaluating the effectiveness of employment and training initiatives serves both policy makers and program customers. Outcome reports can provide the information policy makers need to better direct government resources of time and money. Knowing a program’s outcomes can also allow customers to make informed decisions about participation and realistic assumptions about their results.

The history of legislation regarding program evaluation goes back several decades. In 1993, the federal government passed the Government Performance and Results Act, an act that was modernized in 2011 (Lew & Zients, 2011). In 2010, the federal Office of Management and Budget released the memorandum “Evaluating Programs for Efficacy and Cost-Efficiency,” which stated that the Office had “allocated approximately $100 million to support 35 rigorous program evaluations” (Orszag, 2010). At the state level, the Wyoming Legislature’s Management Audit Committee has focused on program evaluation since 1988, in “response to legislators’ demands for independent, thorough analysis of program performance and related policy issues” (http://legisweb.state.wy.us/LSOWEB/ProgramEval/ProgramEval.aspx). Currently, the Wyoming Department of Education requires institutions of higher education to report student data “for the purposes of policy analysis and program evaluation” in order to be eligible to receive state scholarship funds (WY Stat § 21-16-1308, c. (2015)).

What Does Past Research Show?

Postsecondary student outcomes is a popular research topic. Journals, including the Economics of Education Review, and offices such as the Center for Analysis of Postsecondary Education and Employment (http://capseecenter.org/) and the Center for Postsecondary and Economic Success (http://www.clasp.org/issues/postsecondary) produce large amounts of research on the subject. Past studies have often used observational techniques to compare high school graduates to postsecondary graduates. Generally, observational studies have found that:

- college graduates are less likely to be unemployed;

- award completion, as opposed to an accumulation of credits, is key to higher earnings;

- youth from higher income families are more likely to go to college;

- the difference in earnings between high school graduates and college graduates is greater for women than it is for men;

- college graduates have better health; and

- self-selection limits observational studies and experiments (Oreopoulos & Petronijevic, 2013).

Observational studies can imply correlations, but many do not account for parents’ education and test scores, among other factors, and therefore cannot isolate the impact of, for example, the Hathaway Scholarship Program. Compared to observational studies of postsecondary outcomes, statistically sound causal studies require far more high-quality data than most researchers have. A thorough review of 38 academic papers, titled “Summary of Research on Effects of Community College Attendance on Earnings” (Liddicoat & Fuller, 2012), found only one paper examining the causal effects of community college enrollment (Miller, 2007).

The quality and extent of data to which R&P has access is difficult for other researchers to match, and R&P has been able to produce several causal impact analyses, most recently “Higher Wages and More Work: Impact Evaluation of a State-Funded Incumbent Worker Training Program” by Patrick Manning (2016). Wyoming is in a unique situation because R&P has built one of the most extensive, high-quality databases in the country. R&P has data sharing relationships with 11 states, over a dozen Wyoming state health care boards, and the Wyoming Departments of Education and Transportation. More information on R&P’s formal partnerships can be found at http://doe.state.wy.us/LMI/LMIinfo.htm.

Impact studies of statewide merit-based scholarships on future in-state labor force participation are even rarer than causal studies related to postsecondary student earnings. However, one analysis found that Missouri’s highly selective Bright Flight Scholarship, covering about 40% of the University of Missouri-Columbia’s tuition, increased labor force participation by 4.3% eight years after graduation. The authors suggest that a higher-value scholarship could have a greater effect on in-state labor force participation (Harrington et al., 2015).

Why is Research Needed for Wyoming Students?

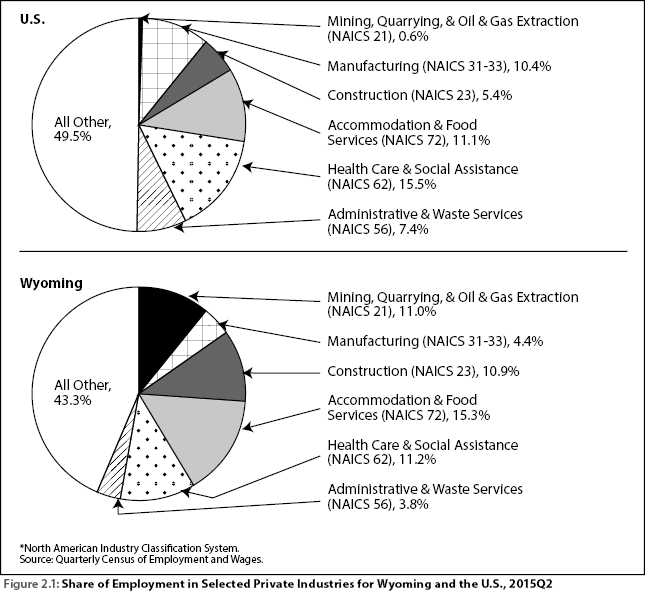

While previous research is important to keep in mind, the extent to which other findings can be applied to Wyoming is limited because of the state’s distinct population and economic characteristics, number of postsecondary institutions, and the Hathaway Scholarship Program. Wyoming is the least populous state, with the second lowest population density and the 13th most rural population in the United States (U.S. Census Bureau). Wyoming’s economy departs from the national average in several significant ways. First, a Wyoming resident is 17 times more likely to work in mining, quarrying, and oil and gas than the national average, and twice as likely to work in construction (Bureau of Labor Statistics, 2015). Second, manufacturing, health care & social assistance, and administration & waste services play a much smaller role in Wyoming’s economy than for the nation as a whole (Bureau of Labor Statistics, 2015; see Figure 2.1). As of the 2014-2015 school year, the National Center for Education Statistics listed Wyoming as the state with the fewest postsecondary degree-granting institutions (10). Wyoming has only one public four-year university and, as of 2010, was one of 14 states with a merit-based scholarship program (Zhang & Ness, 2010). These demographic, economic, and educational differences create a need for Wyoming-specific student outcome research.
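Comparisons such as “17 times more likely” are commonly expressed as a location quotient: the share of local employment in an industry divided by the share of national employment in that industry. The sketch below shows only the arithmetic. It assumes the comparison above was derived this way, which the source does not state, and the function name and employment counts are hypothetical.

```python
def location_quotient(local_industry, local_total,
                      national_industry, national_total):
    """Local employment share in an industry divided by the national share."""
    if local_total <= 0 or national_total <= 0 or national_industry <= 0:
        raise ValueError("totals and national industry employment must be positive")
    local_share = local_industry / local_total
    national_share = national_industry / national_total
    return local_share / national_share


# Hypothetical counts chosen only to illustrate the calculation,
# not actual Quarterly Census of Employment and Wages figures.
lq = location_quotient(local_industry=50, local_total=1_000,
                       national_industry=500, national_total=100_000)
print(round(lq, 1))
```

A value above 1 means the industry accounts for a larger share of employment locally than nationally; a value below 1 means a smaller share.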

What is the Best Way to Evaluate Student Outcomes?

Evaluating student outcomes is not a straightforward process. Defining student success, gathering the appropriate data, tailoring research to the local environment, accounting for all the pathways to and from postsecondary education, and creating a comparison group all compound the problem of program evaluation. Many program evaluations define a successful student as one who receives a diploma and earns higher wages following graduation (Benson, Esteva, & Levy, 2015; Belfield, Liu, & Trimble, 2014; Jepsen, Troske, & Coomes, 2014; Carneiro, Heckman, & Vytlacil, 2011; Bailey, Kienzl, & Marcotte, 2004), but defining success in these limited terms has drawbacks. As discussed by Oreopoulos and Salvanes in their 2011 paper “Priceless: The Nonpecuniary Benefits of Schooling,” students can use their education to secure a more comfortable job, reduce their risk of unemployment, work fewer hours for the same pay, improve a specific skill set without receiving a diploma, or form more stable households. These alternative measures of success are more ambiguous than earnings, and they are only the beginning. Papers further discussing these measures of success include the Pew Research Center’s 2014 paper “The Rising Cost of Not Going to College” (Taylor et al., 2014) and the Annual Review of Sociology’s 2012 article “Social and Economic Returns to College Education in the United States” (Hout, 2012).

Beyond defining success, data collection is a complicated, ongoing process. Data sharing agreements with schools and other government departments require negotiations and updates; program goals and awards change over time. Data collection methods vary by institution, as do the data suppression requirements and data accuracy. R&P’s preferred data comes from administrative records, which can hold biases constant throughout the data set, but even thorough administrative records cannot account for all variables. For example, unemployment insurance wage records show quarterly wages, but generally do not show how many hours a person worked to earn those wages, which is a critical part of estimating student outcomes and a variable R&P is working hard to estimate. Even with a good data set, which can take years to build, how do administrative records measure all the social benefits of a postsecondary education?

Making student outcome research relevant requires more than just a description of how many people graduated and how much money they made. Statistically sound research requires creating a comparison group and holding factors like age and program of study constant. Creating an appropriate comparison group is a priority for R&P, and the statistical processes and software that allow for complex control group formation are evolving (Middleton & Aronow, 2013; Steiner, Cook, Shadish, & Clark, 2010; Sekhon, 2009). For more information on control groups, see R&P’s 2002 article “Compared to What? Purpose and Method of Control Group Selection” by Tony Glover.
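One simple way to hold factors like age and program of study constant is exact matching: participants are compared only with non-participants who share the same characteristics. The sketch below illustrates that idea and is not R&P’s method; the cited papers describe more elaborate approaches. The record layout, field names, and wage values are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def matched_difference(records, keys=("age_group", "program")):
    """Average participant-minus-comparison wage gap across matched cells.

    Each record is a dict holding the matching keys, a boolean
    'participant' flag, and a numeric 'wage'. Cells that lack either
    participants or comparisons are dropped. Cell gaps are weighted
    by the number of participants in the cell.
    """
    cells = defaultdict(lambda: {True: [], False: []})
    for r in records:
        cell = tuple(r[k] for k in keys)
        cells[cell][bool(r["participant"])].append(r["wage"])

    weighted_sum = 0.0
    n_participants = 0
    for groups in cells.values():
        treated, comparison = groups[True], groups[False]
        if not treated or not comparison:
            continue  # no counterpart with the same characteristics
        weighted_sum += (mean(treated) - mean(comparison)) * len(treated)
        n_participants += len(treated)

    if n_participants == 0:
        raise ValueError("no cell contains both participants and comparisons")
    return weighted_sum / n_participants


# Hypothetical quarterly wages, for illustration only.
records = [
    {"age_group": "18-24", "program": "nursing", "participant": True, "wage": 9000},
    {"age_group": "18-24", "program": "nursing", "participant": True, "wage": 11000},
    {"age_group": "18-24", "program": "nursing", "participant": False, "wage": 8000},
    {"age_group": "25-34", "program": "welding", "participant": True, "wage": 12000},
    {"age_group": "25-34", "program": "welding", "participant": False, "wage": 11000},
    {"age_group": "25-34", "program": "welding", "participant": False, "wage": 9000},
    # No comparison record exists for this cell, so it is dropped.
    {"age_group": "35-44", "program": "welding", "participant": True, "wage": 15000},
]
gap = matched_difference(records)
print(gap)
```

Exact matching discards participants who have no counterpart, as with the last record here. That trade-off between comparability and sample size is one reason more flexible matching methods are used when many characteristics must be held constant.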

Finally, student outcomes must be put into the context of both the local economy and the program’s goals (Cielinski, 2015). For example, if graduates of a program have a higher unemployment rate than the national average, is the program considered ineffective? Or does the research consider the local unemployment rate and the social need for an occupation in the area? Does the program measure success in terms of the rate of graduation? Or is it important to also assess the rate at which those graduates get jobs in a certain area? How will program goals change during an economic downturn when more students enroll in postsecondary education (Fain, 2014)? While research discussed in this article can give Wyoming policy makers an example of what kind of results to expect and how results can be measured, there is no national research that can put outcomes of Wyoming students into the context of the local economy and specific program goals.

Conclusion

Program evaluation provides important information for both policy makers and the public on how to invest limited resources. Assessing student outcomes is complicated and can involve dozens of variables, but Research & Planning has the ability to measure the causal relationship between education and postsecondary outcomes.

References

Bailey, T., Kienzl, G., & Marcotte, D. E. (2004, August). The return to a sub-baccalaureate education: The effects of schooling, credentials and program of study on economic outcomes. In U.S. Department of Education. Retrieved from http://tinyurl.com/hath2a

Belfield, C., Liu, Y., & Trimble, M. J. (2014, March). The medium-term labor market returns to community college awards: Evidence from North Carolina. In Center for Analysis of Postsecondary Education and Employment. Retrieved from http://capseecenter.org/wp-content/uploads/2014/09/1D-Liu-CAPSEE-091814.pdf

Benson, A., Esteva, R., & Levy, F. S. (2015, January 26). Dropouts, taxes and risk: The economic return to college under realistic assumptions. In Social Science Research Network. Retrieved from http://tinyurl.com/hath2c

Bureau of Labor Statistics, U.S. Department of Labor, Quarterly Census of Employment and Wages. (2015). Retrieved April 28, 2016 from http://www.bls.gov/qcew/

Carneiro, P., Heckman, J. J., & Vytlacil, E. (2011). Estimating marginal returns to education. American Economic Review, 101(6), 2754-2781. doi:10.1257/aer.101.6.2754

Cielinski, A. (2015, October). Using post-college labor market outcomes: Policy challenges and choices. In Center for Postsecondary and Economic Success at CLASP. Retrieved from http://tinyurl.com/hsp2f

Fain, P. (2014, November 18). Recession and Completion. In Inside Higher Ed. Retrieved from http://tinyurl.com/hath2d

Glover, T. (2002, June). Compared to what? Purpose and method of control group selection [Electronic version]. Wyoming Labor Force Trends, 39(6), 9-16. Retrieved from http://doe.state.wy.us/LMI/0602/a2.htm

Harrington, J. R., Muñoz, J., Curs, B. R., & Ehlert, M. (2015, October). Examining the impact of a highly targeted state administered merit aid program on brain drain: Evidence from a regression discontinuity analysis of Missouri’s Bright Flight program [Electronic version]. Research in Higher Education. doi: 10.1007/s11162-015-9392-9

Hout, M. (2012, July). Social and economic returns to college education in the United States [Electronic version]. Annual Review of Sociology, 38(1). doi:10.1146/annurev.soc.012809.102503

Jepsen, C., Troske, K., & Coomes, P. (2013, December). The labor-market returns to community college degrees, diplomas, and certificates [Electronic version]. Journal of Labor Economics, 32(1). doi:10.1086/671809

Lew, J., & Zients, J. (2011, April 14). Delivering on the Accountable Government Initiative and implementation of the GPRA Modernization Act of 2010 [Memorandum]. Washington, DC: Executive Office of the President, Office of Management and Budget. Retrieved from http://tinyurl.com/hath2e

Liddicoat, C., & Fuller, R. (2012). Summary of research on effects of community college attendance on earnings. In California Community Colleges Chancellor’s Office. Retrieved from http://tinyurl.com/hath2f

Manning, P. (2016, January). Higher wages and more work: Impact evaluation of a state-funded incumbent worker training program [Electronic version]. Wyoming Labor Force Trends, 53(1), 1, 3-21. Retrieved from http://doe.state.wy.us/LMI/trends/0116/a1.htm

Middleton, J., & Aronow, P. (2013). A class of unbiased estimators of the average treatment effect in randomized experiments. Journal of Causal Inference, 1(1), 135-144. doi:10.2139/ssrn.1803849

Miller, D. (2007). Summary of research on effects of community college attendance on earnings. In Stanford Institute for Economic Policy Research. Retrieved from http://tinyurl.com/hath2g

National Center for Education Statistics. (2014, Fall). Table 317.20. Degree-granting postsecondary institutions, by control and level of institution and state or jurisdiction: 2014-15. In Digest of Education Statistics: 2015. Retrieved from http://tinyurl.com/hath2h

Oreopoulos, P., & Petronijevic, U. (2013, Spring). Making college worth it: A review of the returns to higher education. Postsecondary Education in the United States, 23(1), 41-65. Retrieved from http://tinyurl.com/hath2i

Oreopoulos, P., & Salvanes, K. G. (2011, Winter). Priceless: The nonpecuniary benefits of schooling [Electronic version]. Journal of Economic Perspectives, 25(1), 159-184. doi:10.1257/jep.25.1.159

Orszag, P. (2010, July 29). Evaluating programs for efficacy and cost-efficiency [Memorandum]. Washington, DC: Executive Office of the President, Office of Management and Budget. Retrieved from http://tinyurl.com/hath2j

Sekhon, J. (2009, September). The Neyman-Rubin model of causal inference and estimation via matching methods. The Oxford Handbook of Political Methodology. doi:10.1093/oxfordhb/9780199286546.003.0011

Steiner, P. M., Cook, T. D., Shadish, W. R., & Clark, M. H. (2010). The importance of covariate selection in controlling for selection bias in observational studies. Psychological Methods, 15(3), 250-267. doi:10.1037/a0018719

Taylor, P., Parker, K., Morin, R., Fry, R., Patten, E., & Brown, A. (2014, February 11). The rising cost of not going to college. In Pew Research Center. Retrieved from http://tinyurl.com/hath2k

U.S. Census Bureau. (2015, December). Annual Estimates of the Resident Population for the United States, Regions, States, and Puerto Rico: April 1, 2010 to July 1, 2015 (NST-EST2015-01). Retrieved April 28, 2016 from http://www.census.gov/popest/

U.S. Census Bureau. (n.d.). 2010 Census data: Resident population data (Text version). Retrieved April 28, 2016 from http://tinyurl.com/hath2m

U.S. Census Bureau. (n.d.). Percent urban and rural in 2010 by state. Retrieved April 28, 2016 from http://www2.census.gov/geo/docs/reference/ua/PctUrbanRural_State.xls

WY Stat § 21-16-1308, c. (2015).

Zhang, L., & Ness, E. (2010, June). Does state merit-based aid stem brain drain? [Electronic version]. Educational Evaluation and Policy Analysis, 32(2), 143-165. doi:10.3102/0162373709359683